Managing Non-Deterministic AI: A C-Suite Production Guide

July 19, 2025 / Bryan Reynolds

The Predictability Paradox: A C-Suite Guide to Managing Non-Deterministic AI in Production

An organization invests in a state-of-the-art AI system to automate customer support. On Monday, it provides a perfect, compliant answer to a complex query. On Tuesday, given the exact same query, it delivers a slightly different, less precise response. This is not a bug; it is a feature of modern artificial intelligence called non-determinism in AI, and it represents one of the most significant strategic hurdles to enterprise AI adoption. This variability challenges the very pillars of enterprise operations: consistency, reliability, and auditability. Unmanaged, this inherent unpredictability can translate from a technical curiosity into a direct business liability.

This guide serves to demystify non-deterministic AI for business leaders. It explores why this phenomenon matters to the bottom line, details its technical origins, and provides a robust, three-pillar framework for managing its risks and harnessing its power in production systems. The era of AI experimentation is over; succeeding in production requires a new level of operational discipline.

Demystifying the "Ghost in the Machine": What is Non-Deterministic AI?

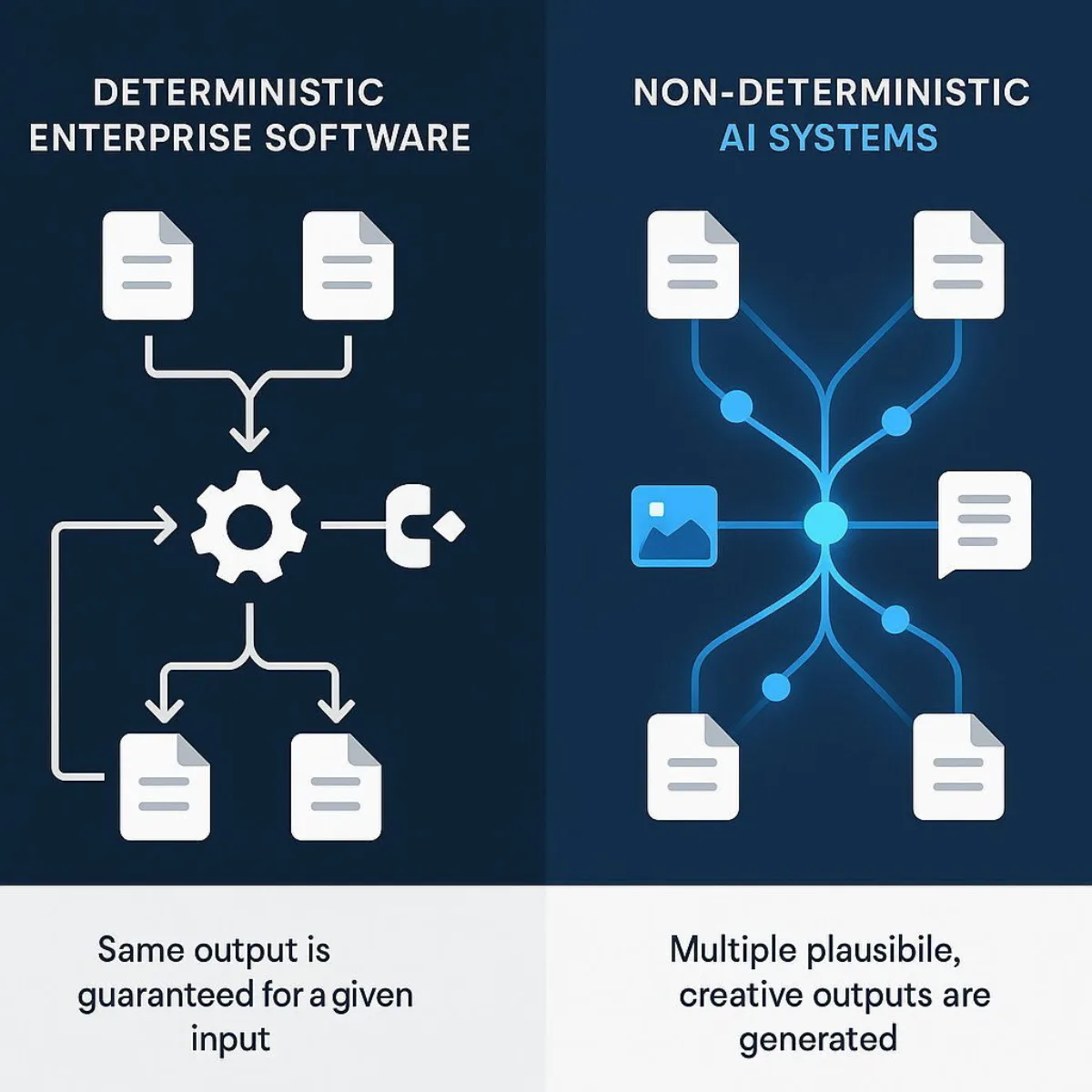

At its core, non-deterministic AI refers to a system that can produce different outputs even when given the identical input across multiple runs. This behavior stands in stark contrast to traditional deterministic software, where the same input always yields the same result, a foundational principle of enterprise computing for decades.

For many modern AI models, especially Large Language Models (LLMs) like those powering generative AI, this variability is an intentional design feature, not a flaw. It is the very source of their creativity, adaptability, and ability to generate diverse, human-like responses. This is what allows an AI to draft multiple distinct marketing slogans from a single prompt or summarize the same document in several unique ways. The conflict that arises is not technical but strategic. Businesses are built on the bedrock of predictable, repeatable processes, such as calculating payroll or processing an invoice. Generative AI, however, thrives on managed unpredictability. Integrating this technology is therefore not just a system upgrade but a clash of operational philosophies. An organization that attempts to force an LLM to be perfectly deterministic may stifle its core value and miss its transformative potential.

AI systems exist on a spectrum of determinism. Some algorithms, like Monte Carlo simulations used in financial modeling or genetic algorithms in optimization, are inherently probabilistic and non-deterministic by design. Others, like LLMs, have configurable parameters or "dials" that can be adjusted to make them more or less deterministic, though achieving perfect predictability is exceptionally challenging. This reality necessitates a shift in mindset. Instead of asking, "How do we eliminate randomness?" the more strategic question becomes, "How do we design a system that manages and channels this variability to achieve reliable business outcomes?"

The Bottom-Line Impact: Why Non-Determinism is a Boardroom Concern

The conversation about AI variability must move from the server room to the boardroom. Unmanaged non-determinism is not a form of technical debt to be addressed later; it is a present-day business liability with tangible consequences across industries.

In high-stakes sectors where precision is non-negotiable, the risks are particularly acute.

- Finance: The financial services industry depends on consistency for regulatory compliance and risk management. Non-deterministic AI can introduce fluctuating risk assessments for loans, unreliable compliance checks against sanctions lists, and inaccurate portfolio recommendations. The consequences are severe: one study found that banks and other financial institutions see an average short-term cumulative abnormal return (CAR) of -21.04% after a public AI incident. Interested in how AI variability plays out in regulated industries? See our analysis of AI hallucinations in finance.

- Healthcare: In medicine, variability can be a matter of life and death. An AI-powered diagnostic tool that suggests different interpretations of the same medical scan on different days poses a critical patient safety risk. Such inconsistencies can lead to missed or incorrect diagnoses, patient harm, and immense legal liability. The "black box" nature of many models exacerbates this problem, making it difficult to trace the source of an error and determine accountability.

- Marketing & Brand Management: A brand's voice is a carefully curated asset that relies on consistency. Non-deterministic AI can generate off-brand, tonally inconsistent, or even factually incorrect marketing copy, diluting brand equity with every customer interaction. While some companies have cleverly used AI's quirks in controlled campaigns, such as Heinz generating images of its iconic ketchup bottle, these were deliberate strategies, not the result of unmanaged randomness.

Beyond these sector-specific examples, unmanaged non-determinism introduces universal business risks. It erodes customer and employee trust when user experiences are inconsistent. It breaks traditional software testing and quality assurance paradigms, which rely on predictable, repeatable outcomes, making bug reproduction and regression testing nearly impossible without a new framework. Finally, it creates operational instability, as downstream business processes that depend on a consistent AI output can fail unexpectedly.

The following table summarizes these industry-specific risks.

Table 1: Industry-Specific Risks of Unmanaged Non-Determinism

| Industry | Specific Risk Example | Potential Business Impact |
|---|---|---|
| Finance | An AI model provides different risk scores for the same loan application on consecutive days. | Regulatory fines for non-compliant lending decisions, financial losses from bad loans, erosion of client trust, and inability to audit decision-making. |
| Healthcare | An AI diagnostic tool highlights a potential tumor on a scan one day but fails to flag the same anomaly the next. | Patient harm from delayed or missed diagnosis, increased malpractice liability, loss of clinician confidence in AI tools, regulatory scrutiny. |
| Marketing & Advertising | An AI generates witty, on-brand ad copy for a product, then later produces a generic, formal description for the same product. | Brand dilution and inconsistency, poor customer experience, wasted ad spend on ineffective creative, potential for off-brand messaging that damages reputation. |

Under the Hood: The Technical Drivers of AI Variability

To effectively manage a system, leaders need a foundational understanding of its inner workings. The variability in AI is not arbitrary; it stems from specific technical sources at different stages of the AI lifecycle.

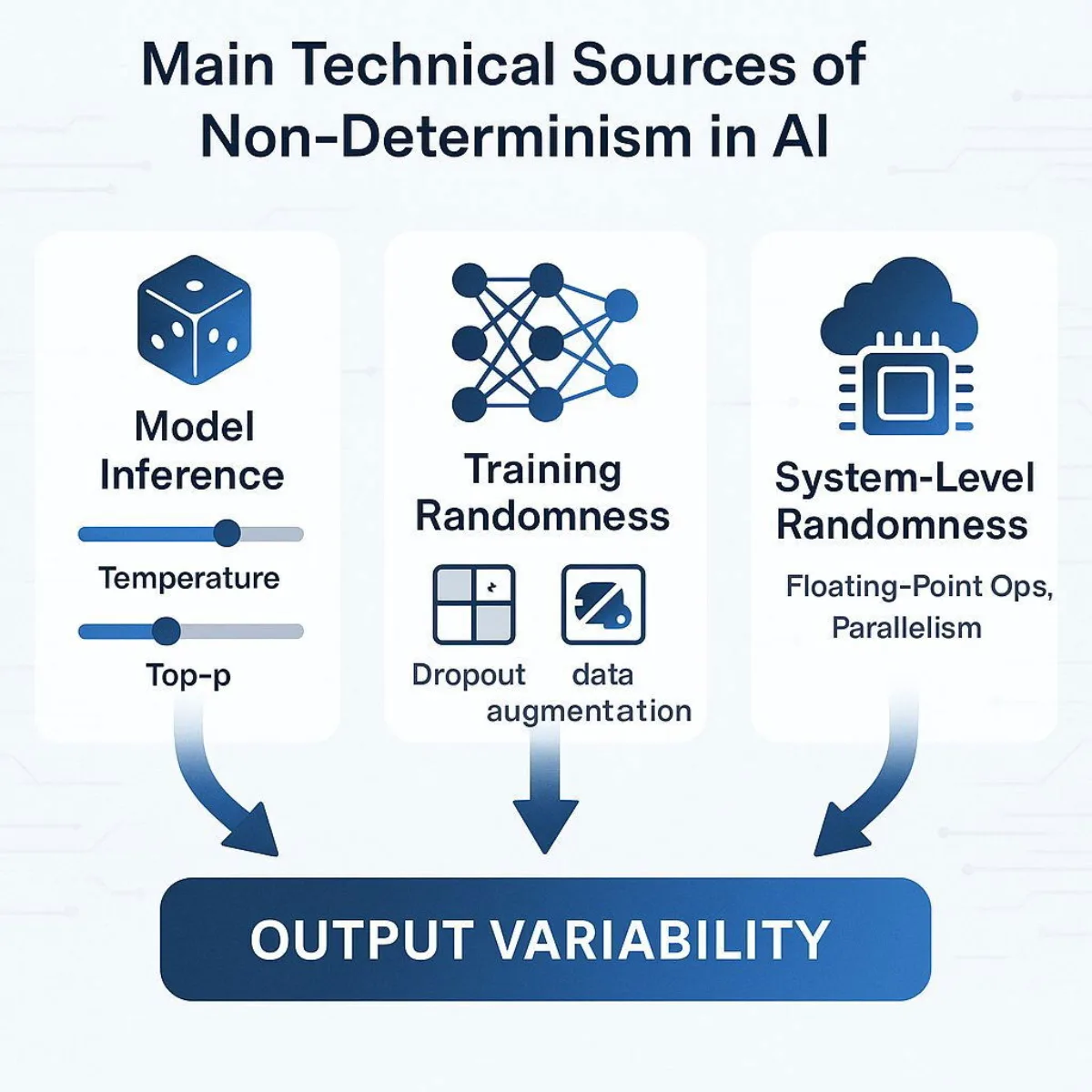

Source 1: The Model's Probabilistic Nature (Inference-Time Randomness)

LLMs operate by predicting the next most likely word, or "token," in a sequence based on the input they receive. They are not retrieving a stored answer; they are calculating a probability distribution over their entire vocabulary and then sampling from it. Several parameters control this sampling process:

- Temperature: This setting adjusts the randomness of the model's choices. A low temperature (e.g., 0.1) makes the model more conservative and deterministic, as it will almost always pick the single word with the highest probability. A high temperature (e.g., 0.9) increases randomness, allowing the model to select less likely words, which leads to more diverse and creative outputs.

- Top-p (Nucleus) Sampling: This technique provides another way to control randomness by limiting the pool of potential words. Instead of considering all possible words, the model only considers the smallest set of words whose cumulative probability exceeds a certain threshold (the "p" value). Even within this smaller, high-probability group, the model's final choice can vary from run to run.
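
To make the two dials above concrete, here is a minimal sketch of temperature scaling and top-p filtering over a toy vocabulary. The four-token vocabulary, the scores, and the function names are assumptions for illustration only; production models apply the same idea over tens of thousands of tokens inside the inference engine.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_filter(probs, p):
    """Keep the smallest set of tokens whose cumulative probability reaches p, then renormalize."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}

def sample_token(logits, temperature=1.0, top_p=1.0, rng=random):
    """Sample one token index after applying temperature and top-p."""
    probs = softmax_with_temperature(logits, temperature)
    nucleus = top_p_filter(probs, top_p)
    indices = list(nucleus)
    weights = [nucleus[i] for i in indices]
    return rng.choices(indices, weights=weights, k=1)[0]

# Hypothetical vocabulary and scores, chosen only to illustrate the effect.
vocab = ["refund", "credit", "voucher", "apology"]
logits = [2.0, 1.5, 0.5, -1.0]

cold = softmax_with_temperature(logits, 0.1)  # nearly all mass on the top token
hot = softmax_with_temperature(logits, 0.9)   # mass spread across several tokens

for token, p_cold, p_hot in zip(vocab, cold, hot):
    print(f"{token:8s} T=0.1: {p_cold:.3f}   T=0.9: {p_hot:.3f}")
```

At a temperature of 0.1 the top token dominates, so repeated runs almost always agree. At 0.9 the second and third tokens keep meaningful probability, which is where run-to-run variation comes from.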

Source 2: The Training Process (Training-Time Randomness)

Randomness is also intentionally introduced during the model's training phase to improve its robustness and prevent a problem known as "overfitting," where the model memorizes the training data instead of learning general patterns.

- Stochastic Elements: Techniques like dropout randomly ignore a fraction of the model's neurons during each training step, forcing the network to learn more resilient representations. Similarly, data augmentation involves applying random transformations to training data (e.g., rotating an image or rephrasing a sentence) to create new examples. Both of these widely used methods make training itself a random process, so two training runs on the same data can produce slightly different final models.

Source 3: The Computing Environment (System-Level Randomness)

Finally, variability can arise from the very hardware and software on which the AI runs.

- Hardware Differences (The GPU Effect): Modern AI models are trained and run on Graphics Processing Units (GPUs) that perform thousands of floating-point calculations in parallel. Due to the nature of parallel processing, the exact order in which these operations are completed can differ slightly between runs. Because floating-point arithmetic rounds each intermediate result, adding the same numbers in a different order can produce slightly different totals. These minuscule rounding differences, though individually insignificant, can accumulate and be amplified through the millions of calculations in a neural network, ultimately resulting in a different final output.

- The "Perfectly Deterministic" Myth: Because of these hardware-level effects and the mathematical possibility of two or more tokens having the exact same probability, achieving perfect, bit-for-bit reproducibility is exceptionally challenging. Even setting the temperature to zero and using a fixed random seed is often not enough to guarantee an identical output every time.
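
The order-of-operations effect described above can be reproduced on an ordinary CPU. The sketch below is an illustration of the underlying arithmetic, not a GPU benchmark: it adds the same numbers in different orders, with a seeded shuffle standing in for the varying completion order of parallel hardware. The helper name and the sample values are assumptions.

```python
import random

# Floating-point addition is not associative: the grouping changes the rounding.
a, b, c = 0.1, 0.2, 0.3
left_first = (a + b) + c
right_first = a + (b + c)
order_matters = left_first != right_first

def sum_in_shuffled_order(values, seed):
    """Add the same numbers in a seed-dependent order, mimicking a varying parallel schedule."""
    shuffled = list(values)
    random.Random(seed).shuffle(shuffled)
    total = 0.0
    for v in shuffled:
        total += v
    return total

# Hypothetical data: 10,000 values between -1 and 1.
source = random.Random(0)
values = [source.uniform(-1.0, 1.0) for _ in range(10_000)]

# Collect the distinct totals produced by 20 different orderings of identical inputs.
naive_totals = {sum_in_shuffled_order(values, seed) for seed in range(20)}

print("(a + b) + c =", repr(left_first))
print("a + (b + c) =", repr(right_first))
print("grouping changes the result:", order_matters)
print("distinct totals across 20 orderings:", len(naive_totals))
```

The differences are tiny, but in a model where one token is only marginally more likely than another, a difference in the last decimal places can be enough to flip the choice.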

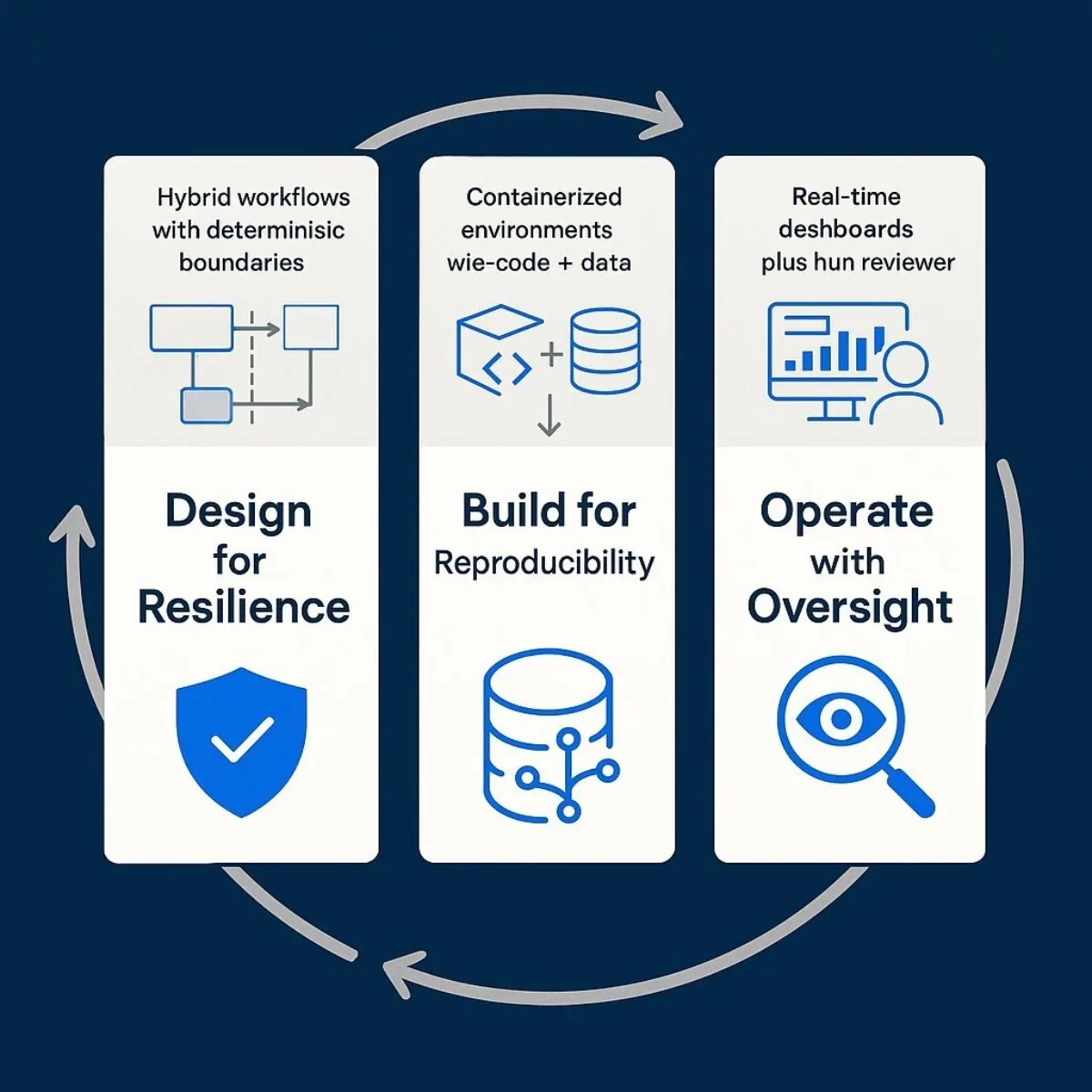

Taming the Chaos: A Framework for Production-Ready AI

While non-determinism is an inherent property of many powerful AI models, the risk it poses to a business is not. The key is to shift the enterprise mindset from expecting exact reproducibility to engineering for managed variability. This requires a holistic approach that builds resilience, reproducibility, and responsibility directly into the AI system. This framework rests on three reinforcing pillars: Design for Resilience, Build for Reproducibility, and Operate with Oversight.

Pillar 1: Design for Resilience - Architectural Guardrails

The first principle is to never deploy a raw AI model directly into a critical business process. Instead, a robust system must be built around the model. This involves creating hybrid architectures that strategically blend deterministic business logic with probabilistic AI capabilities, ensuring that the AI's creativity is channeled within safe, predictable boundaries.

Key architectural patterns for achieving this include:

- Controlled Flows & Prompt Chaining: For business processes that must follow a strict sequence (e.g., customer onboarding), the task should be broken down into a series of smaller, verifiable steps. The overall workflow remains deterministic and auditable, while LLMs can be invoked for specific, bounded tasks within each step, such as summarizing a document or extracting key information. This pattern ensures reliability without sacrificing the benefits of AI. Learn how AI-powered system design underpins such architectural decisions.

- LLM as a Router: In this pattern, an AI model acts as an intelligent triage system. It analyzes an incoming request (e.g., a customer email) and, based on its understanding of the user's intent, routes it to the appropriate deterministic workflow, a specialized AI agent, or a human expert. This is highly effective for managing diverse and unstructured inputs while maintaining structured, predictable processing paths.

- Robust Fallback Mechanisms: All systems fail; resilient systems fail gracefully. The architecture must include plans for what happens when the primary AI model produces a low-confidence answer, fails to respond, or generates an invalid output. The system should be designed to automatically fall back to a simpler, more deterministic model, a predefined rule-based system, or, for high-stakes scenarios, escalate the task to a human for review. This principle of graceful degradation prevents a single component failure from causing a catastrophic system breakdown. For a look at effective fallback patterns, see our business guide to Retrieval-Augmented Generation (RAG).
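
As a rough illustration of the fallback pattern above, the sketch below wraps a model call in deterministic control logic. Everything here is hypothetical: the `ModelResult` type, the confidence score, the 0.8 threshold, and the stand-in model and rule functions are assumptions, and a real system would plug in its own model client and business rules.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

@dataclass
class ModelResult:
    text: str
    confidence: float  # hypothetical score in [0, 1] supplied by the model wrapper

def answer_with_fallback(
    query: str,
    primary: Callable[[str], ModelResult],
    rules: Callable[[str], Optional[str]],
    min_confidence: float = 0.8,
) -> Tuple[str, str]:
    """Return (handler, payload), degrading from model to rules to human review."""
    try:
        result: Optional[ModelResult] = primary(query)
    except Exception:
        # Any model failure (timeout, malformed response) is treated as "no answer".
        result = None

    if result is not None and result.text.strip() and result.confidence >= min_confidence:
        return ("primary_model", result.text)

    rule_answer = rules(query)
    if rule_answer is not None:
        return ("rule_based", rule_answer)

    # Nothing trustworthy was produced: escalate the original query to a person.
    return ("human_review", query)

# Stand-ins for real components, for illustration only.
def confident_model(query: str) -> ModelResult:
    return ModelResult("Your order ships tomorrow.", 0.93)

def unsure_model(query: str) -> ModelResult:
    return ModelResult("Possibly next week?", 0.41)

def broken_model(query: str) -> ModelResult:
    raise TimeoutError("model did not respond")

def simple_rules(query: str) -> Optional[str]:
    return "See our shipping policy page." if "shipping" in query.lower() else None

print(answer_with_fallback("When is shipping?", confident_model, simple_rules))
print(answer_with_fallback("When is shipping?", unsure_model, simple_rules))
print(answer_with_fallback("Cancel my contract", broken_model, simple_rules))
```

The decision path is ordinary deterministic code, so it can be unit-tested and audited in the usual way even though the model inside it is probabilistic.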

A "Governance-by-Design" philosophy embeds these patterns directly into the system architecture, often using enterprise-grade orchestration tools and policy-enforced API gateways to ensure that even the most creative AI operates within safe, auditable boundaries defined by business rules.

Pillar 2: Build for Reproducibility - The MLOps Foundation

An organization cannot manage what it cannot measure or reproduce. A disciplined Machine Learning Operations (MLOps) practice is the non-negotiable foundation for reliable AI in the enterprise. It provides the toolchain and processes necessary to track, version, stabilize, and audit the entire system from development through production.

Essential MLOps components for managing non-determinism include:

- Aggressive Version Control: Every component of the AI system must be meticulously versioned. This goes beyond just the application code to include the datasets used for training (using tools like Data Version Control - DVC), the specific model weights and artifacts, and the configuration files containing hyperparameters. This enables precise rollbacks and makes debugging unpredictable behavior possible.

- Environment Containerization: Tools like Docker and orchestration platforms like Kubernetes are critical for reducing environment-level variability. They package the AI application, its dependencies, libraries, and configurations into a consistent, portable container. This keeps the software environment consistent across development, testing, and production, mitigating the "it worked on my machine" problem that can arise from subtle differences in library versions or system configuration. Containers do not remove the hardware-level effects described earlier, but they take software drift off the list of suspects. For more on how modern teams use containers for robust delivery, check our .NET, Docker & Kubernetes services.

- Comprehensive Experiment Tracking: Every model training run is an experiment. Tools like MLflow or Weights & Biases must be used to automatically log every detail—the parameters used, the performance metrics achieved, and the resulting model artifacts. This creates an immutable, auditable trail that is essential for comparing model versions, diagnosing regressions, and satisfying compliance requirements. Read more about enterprise-grade data and ML platforms like Databricks that support MLOps best practices.

- Model Registry: A model registry acts as a centralized repository to store, version, and manage production-ready models. It ensures that only models that have passed rigorous validation and have been explicitly approved are deployed into production environments, providing a critical governance checkpoint.
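
One lightweight way to tie the versioning ideas above together is to record a single fingerprint for everything that influenced a run. The sketch below is an assumption-laden illustration using only the Python standard library; it is not how DVC, MLflow, or any registry works internally, and every field name and value in `run_config` is a placeholder.

```python
import hashlib
import json

def run_fingerprint(config: dict) -> str:
    """Hash a run configuration so that any change to it yields a different ID."""
    # Sorting keys makes the hash independent of the order the fields were written in.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

# Placeholder values for illustration only.
run_config = {
    "code_commit": "abc1234",
    "dataset_version": "support-tickets-v7",
    "model_artifact": "summarizer-2025-07",
    "container_image": "registry.example.com/summarizer:1.4.2",
    "sampling": {"temperature": 0.1, "top_p": 0.9, "seed": 1234},
}

baseline_id = run_fingerprint(run_config)

# Changing a single sampling parameter produces a new fingerprint.
changed = dict(run_config, sampling={"temperature": 0.7, "top_p": 0.9, "seed": 1234})
changed_id = run_fingerprint(changed)

print("baseline:", baseline_id[:12])
print("changed: ", changed_id[:12])
```

Logging this ID next to each production output lets a team answer "what exactly produced this response?" months later, which is the practical core of auditability.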

Pillar 3: Operate with Oversight - Advanced Monitoring & Human-in-the-Loop

The deployment of an AI model is the beginning, not the end, of the quality assurance process. The paradigm must shift from traditional, pre-deployment testing to continuous, post-deployment evaluation. In production, systems must be monitored not just for technical failures like crashes, but for subtle behavioral drift and degradation in output quality.

Modern AI monitoring techniques include:

- Semantic and Behavioral Testing: Since exact output matches are not expected, testing must evolve. Instead of asserting `output == expected_output`, evaluation should use metrics that tolerate different wording. For example, cosine similarity between text embeddings can indicate whether two different summaries convey the same meaning, and BLEU scores can measure how closely machine-generated text overlaps with a human reference. For further insight, see our AI toolkit platform overview.

- Performance and Cost Monitoring: Business-critical KPIs must be tracked continuously. These include latency (how long does the user wait for a response?), failure rates (how often does the model produce an error or low-quality output?), and token usage (for models priced per token, this is a direct cost driver).

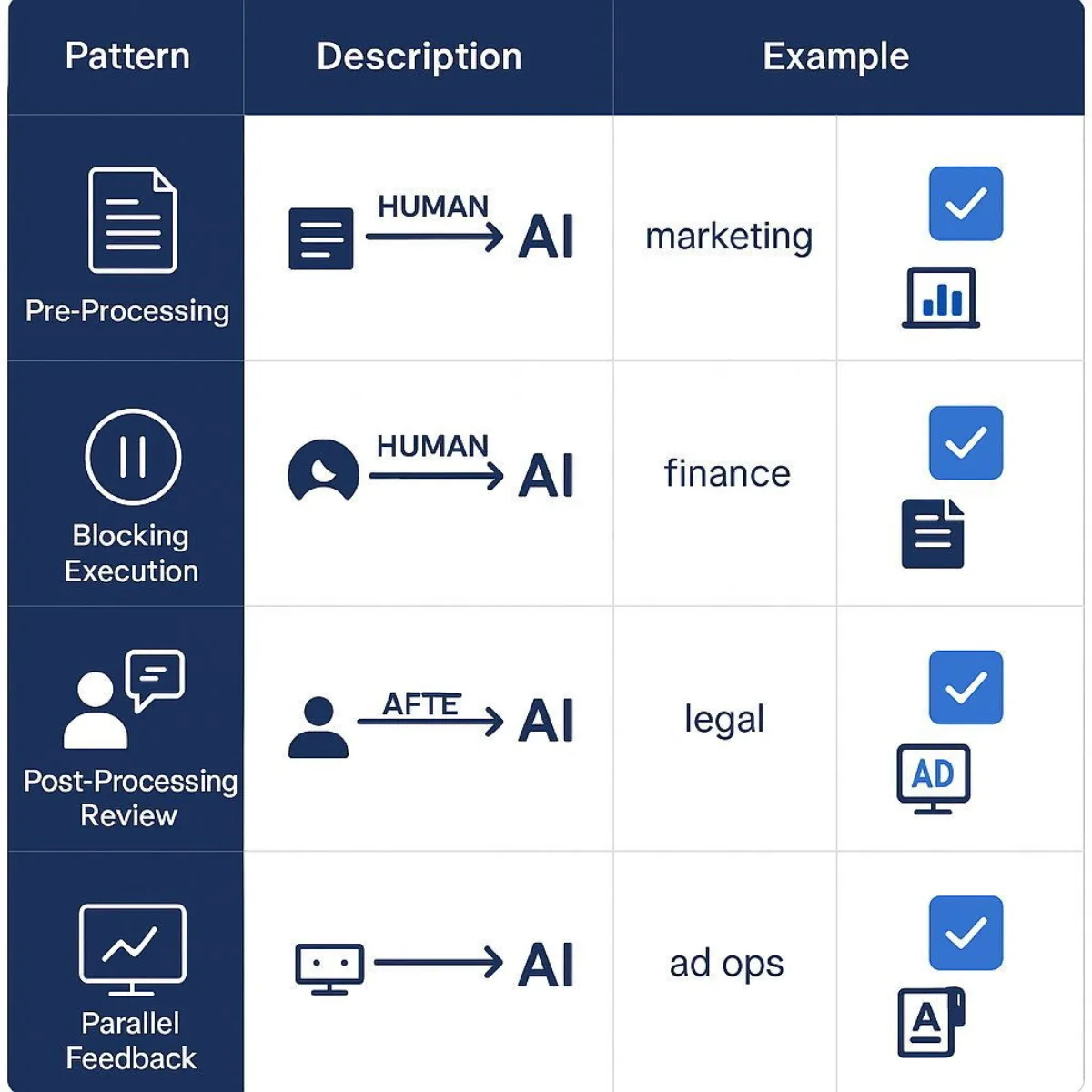

- Human-in-the-Loop (HITL) as a Strategic Control: HITL is the ultimate safety net and quality control mechanism. However, it is not a monolithic concept but rather a set of distinct architectural patterns that determine how and when to leverage human judgment. Choosing the right HITL strategy is a critical business decision that balances automation with risk. For practical patterns, see our executive guide to AI and LLMs.
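
The semantic testing idea from the list above can be sketched as a threshold check rather than an equality check. This is a deliberately simplified illustration: it uses word counts as a stand-in for the embedding vectors a real evaluation would use, and the 0.6 threshold, function names, and sample sentences are all assumptions.

```python
import math
import re
from collections import Counter

def bag_of_words(text: str) -> Counter:
    """Crude stand-in for an embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))

def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def semantically_close(output: str, reference: str, threshold: float = 0.6) -> bool:
    """Pass when the output is similar enough to the reference, not only when identical."""
    score = cosine_similarity(bag_of_words(output), bag_of_words(reference))
    return score >= threshold

# Hypothetical reference answer and two model outputs.
reference = "Your refund will arrive within five business days."
reworded = "The refund will arrive in five business days."
off_topic = "Please restart your router and try again."

print("reworded passes: ", semantically_close(reworded, reference))
print("off-topic passes:", semantically_close(off_topic, reference))
```

A test suite built this way accepts harmless rewording while still failing an answer that drifts away from the reference, which an exact-match assertion cannot do.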

These three pillars are not independent silos; they form a reinforcing feedback loop. A resilient, modular architecture (Pillar 1) is far easier to version and containerize (Pillar 2). A rigorously versioned and reproducible build (Pillar 2) is a prerequisite for conducting meaningful monitoring and A/B testing in production, as it allows teams to isolate variables and confidently attribute changes in behavior to specific modifications rather than random environmental drift. Finally, the data gathered from continuous monitoring and HITL interventions (Pillar 3) provides the most valuable source of information for identifying weaknesses and informing future architectural improvements (Pillar 1). For example, if a fallback to a human reviewer is triggered frequently for a certain type of query, it is a clear signal that the primary AI agent needs to be improved or that a new specialized workflow is required.

The following table outlines the primary HITL patterns and their ideal business applications.

Table 2: Choosing the Right Human-in-the-Loop (HITL) Strategy

| HITL Pattern | Description | Best For (Business Use Case) |
|---|---|---|
| Pre-Processing | Humans define constraints, provide examples, or label data before the AI acts. The AI's operational space is shaped by human input upfront. | Setting brand voice guidelines for a marketing content generator; defining ethical boundaries for a customer service bot. |
| Blocking Execution (Human-in-the-Loop) | The AI pauses mid-workflow and requires explicit human approval, clarification, or a decision before it can proceed. | Authorizing a high-value financial transaction; approving a critical infrastructure change proposed by an agent; confirming a medical diagnosis. |
| Post-Processing Review (Human-on-the-Loop) | The AI completes its task, but a human reviews and can edit or reject the output before it's finalized or delivered to the end-user. | Editing an AI-drafted legal clause; reviewing a summary report for accuracy before sending it to stakeholders; moderating user-generated content flagged by AI. |
| Parallel Feedback (Asynchronous Oversight) | The AI operates autonomously, but a human supervisor monitors its actions in parallel and can intervene or course-correct without stopping the entire process. | Monitoring a fleet of autonomous agents performing low-risk data analysis; supervising an AI that is dynamically optimizing ad spend within a set budget. |
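
The three runtime patterns in Table 2 differ mainly in where the human decision sits relative to the AI's action. The sketch below illustrates that difference in control flow only. The enum, the function signature, and the callbacks are hypothetical, and the Pre-Processing pattern is omitted because it happens before the workflow runs.

```python
from enum import Enum
from typing import Callable, List, Optional

class HitlPattern(Enum):
    BLOCKING = "blocking_execution"
    POST_REVIEW = "post_processing_review"
    PARALLEL = "parallel_feedback"

def run_with_oversight(
    pattern: HitlPattern,
    produce: Callable[[], str],
    human_approves: Callable[[str], bool],
    supervisor_feed: List[str],
) -> Optional[str]:
    """Run one AI step under the chosen oversight pattern; None means it was stopped."""
    if pattern is HitlPattern.BLOCKING:
        # The human decides before the AI acts at all.
        if not human_approves("Proceed with this step?"):
            return None
        return produce()

    output = produce()

    if pattern is HitlPattern.POST_REVIEW:
        # The AI has finished, but nothing is released without sign-off.
        return output if human_approves(output) else None

    # PARALLEL: release immediately and copy the output to a supervisor's feed.
    supervisor_feed.append(output)
    return output

# Stand-in callbacks for illustration only.
feed: List[str] = []
draft = lambda: "Proposed ad budget shift: +5% to search."
always_yes = lambda _item: True

print(run_with_oversight(HitlPattern.BLOCKING, draft, always_yes, feed))
print(run_with_oversight(HitlPattern.PARALLEL, draft, always_yes, feed))
print("items awaiting supervisor glance:", len(feed))
```

The cost of each pattern is visible in the code: blocking and post-review put a person on the critical path, while parallel feedback keeps latency low at the price of catching problems only after release.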

Conclusion: Your Partner for Predictable Performance in an Unpredictable World

Non-determinism is an inherent feature of the powerful AI models that are reshaping industries. While this variability is the source of their creative and adaptive power, it also presents a fundamental challenge to enterprise standards of reliability, auditability, and trust. The associated business risks, however, are not inevitable. They are entirely manageable with the right expertise, architecture, and operational discipline.

The framework of Design for Resilience, Build for Reproducibility, and Operate with Oversight provides a strategic, holistic approach to taming this chaos. It moves beyond simply deploying a model and focuses on engineering a complete system that channels AI's probabilistic nature into predictable and valuable business outcomes. This requires building robust architectural guardrails, implementing a rigorous MLOps foundation, and establishing continuous monitoring with strategic human oversight.

The era of casual AI experimentation is over. To succeed in production, enterprises need a partner who understands how to build systems that are not only intelligent but also robust, reliable, and trustworthy. Navigating the predictability paradox is the central challenge of the current wave of AI adoption, and solving it is the key to unlocking its full potential and turning technological promise into predictable business performance.

About Baytech

At Baytech Consulting, we specialize in guiding businesses through this process, helping you build scalable, efficient, and high-performing software that evolves with your needs. Our MVP-first approach helps our clients minimize upfront costs and maximize ROI. Ready to take the next step in your software development journey? Contact us today to learn how we can help you achieve your goals with a phased development approach.

About the Author

Bryan Reynolds is an accomplished technology executive with more than 25 years of experience leading innovation in the software industry. As the CEO and founder of Baytech Consulting, he has built a reputation for delivering custom software solutions that help businesses streamline operations, enhance customer experiences, and drive growth.

Bryan’s expertise spans custom software development, cloud infrastructure, artificial intelligence, and strategic business consulting, making him a trusted advisor and thought leader across a wide range of industries.